The human face is a marvel of expression. In a fraction of a second, it can convey joy, doubt, confusion, or delight without a single word being spoken. For years, we’ve believed this nuanced language of emotion was exclusively human. So, how is it possible that an AI can learn to understand it with such precision?

The answer isn’t magic; it’s a blend of brilliant psychological research and powerful machine learning. The secret lies in first teaching the AI the fundamental “alphabet” of facial expressions before it can learn to read the “words” of emotion. This foundational alphabet is a scientific method that has revolutionized how we decode the human face.

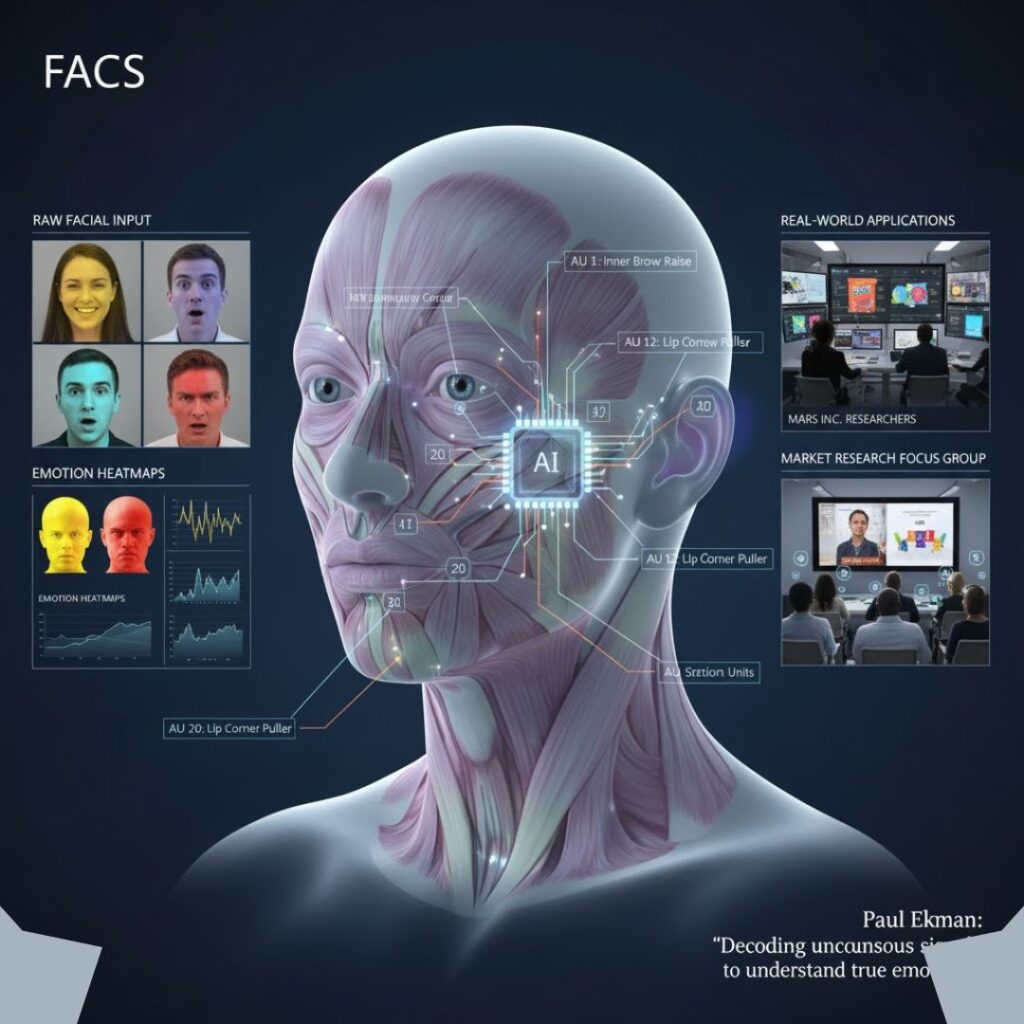

Decoding the Face: What is FACS?

To teach a machine, you first need a standardized language. In the world of emotion analysis, that language is FACS, which stands for the Facial Action Coding System. Developed by renowned psychologists Dr. Paul Ekman and Wallace V. Friesen, FACS is a comprehensive, objective map of every visually distinguishable movement the human face can make.

Think of it this way: the English alphabet has 26 letters that combine to form every word we know. Similarly, FACS breaks down every facial expression into its core components. It’s a system based not on interpreting emotion, but on simply identifying the physical muscle movements involved.

The Building Blocks: Understanding Action Units (AUs)

The individual “letters” in the FACS alphabet are called Action Units, or AUs. Each AU corresponds to the contraction or relaxation of a specific facial muscle or a small group of muscles.

Here are a few simple examples:

- AU 1: Inner Brow Raiser

- AU 4: Brow Lowerer

- AU 12: Lip Corner Puller (the main action in a smile, driven by the zygomaticus major muscle)

- AU 15: Lip Corner Depressor (often seen in sadness)

A single expression is rarely just one AU. A genuine, happy smile (known as a Duchenne smile), for example, is a combination of AU 12 (Lip Corner Puller) and AU 6 (Cheek Raiser), which causes the crow’s feet at the corners of the eyes. By focusing on this level of detail, facial action coding provides an incredibly granular and objective way to describe any expression.
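
To make the “letters and words” idea concrete, here is a minimal Python sketch of how a coded face might be represented. The AU numbers and names come from the examples above; the `FaceCoding` class and the `is_duchenne_smile` helper are hypothetical names invented for illustration, not part of FACS or any library.

```python
from dataclasses import dataclass

# AU numbers and names as listed in this article.
ACTION_UNITS = {
    1: "Inner Brow Raiser",
    4: "Brow Lowerer",
    6: "Cheek Raiser",
    12: "Lip Corner Puller",
    15: "Lip Corner Depressor",
}


@dataclass(frozen=True)
class FaceCoding:
    """The AU numbers marked as active in one frame (hypothetical type)."""
    active_aus: frozenset

    def describe(self) -> str:
        # Render the coding as a "+"-joined list of AU numbers, e.g. "6+12".
        return "+".join(str(au) for au in sorted(self.active_aus))

    def names(self) -> list:
        # Fall back to a generic label for AUs missing from the small table above.
        return [ACTION_UNITS.get(au, f"AU {au}") for au in sorted(self.active_aus)]


def is_duchenne_smile(coding: FaceCoding) -> bool:
    # Per the article: AU 12 (Lip Corner Puller) together with AU 6 (Cheek Raiser).
    return {6, 12} <= coding.active_aus


smile = FaceCoding(frozenset({12, 6}))
polite = FaceCoding(frozenset({12}))

print(smile.describe(), is_duchenne_smile(smile))    # 6+12 True
print(polite.describe(), is_duchenne_smile(polite))  # 12 False
```

Notice that nothing in this representation mentions emotion. The coding records only which movements are present, which is the point of the system.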

From Human Expertise to Machine Learning

So, how does this detailed human system teach an AI? It happens in three steps, each building on the one before.

- Creating High-Quality Training Data: The process begins with human experts who are certified in FACS. They painstakingly watch thousands of hours of video and look at countless images, manually labeling every visible Action Unit, frame by frame. This creates a massive, expertly annotated dataset.

- Training the AI Model: This labeled dataset is then used to train a machine learning model. The AI analyzes the data and learns to associate the visual patterns in a face—like pixels, shapes, and textures—with the specific Action Unit labels provided by the experts.

- Connecting AUs to Emotion: Once the AI has mastered detecting AUs, it takes the final step: learning which combinations of AUs reliably correlate with the six basic emotions in Ekman’s model (happiness, sadness, anger, fear, surprise, and disgust). Because it’s built on the objective foundation of the facial action coding system, the model’s emotional interpretations are grounded in observable muscle movements, not guesswork.
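
The three steps above can be sketched in a few lines of Python. This is a toy illustration, not a real training pipeline: `to_multi_hot` shows how expert labels might become training targets for a model that predicts many AUs at once, and `best_emotion` stands in for the final step with a hand-written lookup instead of a learned mapping. The AU combinations listed for each emotion are commonly cited prototypes, used here as assumptions, and every function and variable name is hypothetical.

```python
# Fixed ordering of the AUs this toy example knows about.
AU_ORDER = [1, 2, 4, 5, 6, 7, 9, 12, 15, 16, 20, 23, 26]


def to_multi_hot(active_aus):
    """Encode one frame's expert labels as a 0/1 target vector (one slot per AU)."""
    return [1 if au in active_aus else 0 for au in AU_ORDER]


# Assumed prototype combinations, for illustration only.
EMOTION_PROTOTYPES = {
    "happiness": {6, 12},
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "anger": {4, 5, 7, 23},
    "disgust": {9, 15, 16},
}


def score_emotions(detected_aus):
    """Jaccard overlap between the detected AUs and each emotion prototype."""
    detected = set(detected_aus)
    scores = {}
    for emotion, prototype in EMOTION_PROTOTYPES.items():
        union = detected | prototype
        scores[emotion] = len(detected & prototype) / len(union)
    return scores


def best_emotion(detected_aus, threshold=0.5):
    """Return the best-matching emotion, or None if no prototype overlaps enough."""
    scores = score_emotions(detected_aus)
    emotion, score = max(scores.items(), key=lambda item: item[1])
    return emotion if score >= threshold else None


print(to_multi_hot({6, 12}))
print(best_emotion({6, 12}))     # happiness
print(best_emotion({1, 4, 15}))  # sadness
print(best_emotion({23}))        # None
```

In a production system, a trained model would sit between these two functions, turning raw pixels into AU predictions. The sketch only shows the shape of its inputs and outputs.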

Why This Method Leads to Superior Accuracy

Using FACS as the basis for training an AI isn’t just one way to do it; it has three properties that make it a strong foundation for accuracy. Here’s why:

- Objectivity: FACS is a standardized system that describes muscle movement, which keeps subjective interpretation of emotion out of the training labels.

- Granularity: It allows the AI to recognize incredibly subtle changes and micro-expressions that last only a fraction of a second—movements that a casual human observer would almost certainly miss.

- Universality: FACS is based on human anatomy, making it a universally applicable system across different cultures and demographics.
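
As a rough illustration of the granularity point, the sketch below scans per-frame detections for a single AU and picks out activations short enough to count as micro-expressions. The 0.5-second cutoff, the 30 fps frame rate, and the toy data are assumptions chosen for the example, and `find_brief_activations` is a hypothetical helper.

```python
def find_brief_activations(active_flags, fps, max_duration_s=0.5):
    """Find runs of consecutive active frames lasting at most max_duration_s.

    Returns a list of (start_frame, end_frame, duration_s), end_frame inclusive.
    """
    events = []
    start = None
    # A trailing False closes any run that reaches the last frame.
    for i, active in enumerate(list(active_flags) + [False]):
        if active and start is None:
            start = i
        elif not active and start is not None:
            duration = (i - start) / fps
            if duration <= max_duration_s:
                events.append((start, i - 1, duration))
            start = None
    return events


# Toy per-frame detections for AU 4 at 30 fps: a 6-frame flicker, then a 45-frame frown.
au4 = [False] * 10 + [True] * 6 + [False] * 20 + [True] * 45 + [False] * 5

print(find_brief_activations(au4, fps=30))  # [(10, 15, 0.2)]
```

Only the 6-frame burst is reported, because the 45-frame activation lasts 1.5 seconds and exceeds the cutoff.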

Conclusion

The remarkable ability of Emotion AI to understand human feelings is built on a bedrock of scientific rigor. It’s a testament to how decades of psychological research can empower the next wave of technological innovation. By learning the universal language of the face through FACS, AI models can provide insights with a level of detail and objectivity that was once unimaginable, helping businesses connect with their customers on a more human level.

FAQs

1. What is the Facial Action Coding System (FACS)?

The Facial Action Coding System (FACS) is a comprehensive, scientifically backed system that catalogs human facial movements. It breaks down any facial expression into its constituent muscle movements, called Action Units (AUs), providing an objective and standardized way to measure facial activity.

2. Who created FACS?

FACS was developed by psychologists Dr. Paul Ekman and Wallace V. Friesen in the 1970s. Their research is considered foundational in the field of emotion and non-verbal communication.

3. What are Action Units in facial coding?

Action Units (AUs) are the fundamental building blocks of FACS. Each AU represents a specific movement of a facial muscle or a group of muscles. For example, raising the inner brows is one AU, and pulling the lip corners up into a smile is another. Complex expressions are formed by combining multiple AUs.

4. Why is FACS important for training AI?

FACS is crucial for training accurate Emotion AI because it provides an objective, standardized, and highly detailed framework. Using FACS for labeling training data reduces human bias and allows the AI to learn the subtle muscular changes associated with emotion, leading to more precise and reliable results.